Organized business’s knee-jerk opposition to paid sick days legislation

The Senate Committee on Health, Education, Labor, and Pensions is holding its Mother’s Day hearing today, and the main subject is paid sick leave, something every working mother needs. Sen. Tom Harkin (D-Iowa) and Rep. Rosa DeLauro (D-Conn.) have introduced a bill, the Healthy Families Act (S. 984/H.R. 1876), to mandate that every employee receive at least seven days of paid time off for illness each year. Everywhere in the civilized world, employees have the right to at least some minimum amount of paid leave when they or their children are sick or when they have to see a doctor—everywhere except in the United States.

The organized U.S. business community—represented by the Washington lobbyists and dozens of giant trade associations—fiercely opposes giving American workers this right.

Why? Because business owners don’t want to be told what to do by anybody, least of all by the government. They consider themselves entitled to force employees to choose between working while sick, taking leave without pay (if the employees are lucky enough to have the right to take unpaid leave), or being fired.

At this morning’s hearing, the business community was represented by the Society for Human Resource Management (SHRM), which reflexively takes the position that workers should not have legal rights. In SHRM’s view, workers should not, for example, have the legal right not to be fired without just cause, the right to a minimum wage, the right to unpaid leave to care for family members, or the right to advance notice that their office, store, or factory will be shut down. The SHRM position is that wages, benefits, and protections should be left to the whim of the employer, or in fancier terms, to market forces.

As usual, the SHRM witness is testifying this morning that her business treats its employees well, giving those with three or more years of service 20 days of paid leave. The implication is that left alone, businesses will treat employees as well as they can afford to, and everything will be for the best.

But this benign view is not true. Absent legislation, businesses will treat employees only as well as they want to, even if they can afford to do much more. Even businesses whose CEOs are paid millions of dollars a year can deny all or most of their workers any paid sick leave at all. This problem is so widespread that about 40 percent of the private-sector workforce has no right to even a single day of paid sick leave. That’s 40 million people—mostly low-wage workers who are barely scraping by—who go unpaid if they get sick or if they have to take time off to care for a sick family member.

The SHRM witness argues that a mandate is “inflexible,” and that’s true. It should be inflexible; the whole point is to guarantee a basic, minimum right to workers—most of whom are women—that they need very badly. Nothing would prevent a business from doing more, as the SHRM witness’ business already does.

SHRM’s alternative arguments against the paid sick leave legislation are altogether astonishing. SHRM’s witness argues that providing generous benefits is a competitive advantage to her company that would be lost if every business were compelled by law to provide them, ignoring that the bill’s mandate is for only seven days of paid leave, rather than the 20 days her business provides, leaving plenty of competitive advantage.

“We provide generous paid leave so that we can continue to be an employer of choice for employees and applicants in our area. What we do not want is a government-imposed paid leave mandate to take away our competitive edge over other employers.”

And finally, she argues that it is somehow degrading to be told what to do by the government:

“Organizations such as ours that are already extremely successful with flexible workplace outcomes should not be brought down to the mediocre level that regulatory approaches would be trying to get not-so-well-run companies up to achieving.”

The silliness of these arguments is pretty good evidence that the business community has no good reason (no economic reason) to oppose the Healthy Families Act. Their opposition is ideological: They own the businesses, and no one has the right to tell them (the 1 percent) how to treat their workers—not even a government of the people, by the people, and for the people.

Grasping at Chinese straws

The Commerce Department released another depressing report on the U.S. trade deficit this morning, our monthly reminder of the huge gap between globalization’s economic reality and American economic policymaking.

In March, we bought about $52 billion (28 percent) more from the rest of the world than we sold. From first quarter 2011 to first quarter 2012, the deficit on goods and services rose almost 8 percent, with China representing almost two-thirds of our non-oil deficit with the rest of the world.
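As a rough check on the March figure, "28 percent more than we sold" can be read as imports equaling 1.28 times exports, which makes the deficit 28 percent of exports. A minimal Python sketch, assuming that reading and using only the rounded numbers above, backs out the implied monthly export and import totals. The function name is hypothetical, and the results are illustrative approximations, not the Commerce Department's published levels.

```python
# "We bought about $52 billion (28 percent) more than we sold" is read here as
# imports = 1.28 * exports, so the deficit equals 28 percent of exports.
# Inputs are the rounded figures from the text, so the outputs are approximate.


def implied_trade_flows(deficit: float, deficit_share_of_exports: float) -> tuple:
    """Return (exports, imports) implied by a deficit and its size relative to exports."""
    exports = deficit / deficit_share_of_exports
    imports = exports + deficit
    return exports, imports


exports, imports = implied_trade_flows(52.0, 0.28)
print(f"Implied exports: about ${exports:.1f} billion")  # about $185.7 billion
print(f"Implied imports: about ${imports:.1f} billion")  # about $237.7 billion
```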

Yet, over the past year or so, a drumbeat of analysis in the establishment business press has been telling us to stop worrying; our chronic trade imbalance with China will soon disappear. New York Times columnist Eduardo Porter last week summed up the happy scenario: Chinese wages and transpacific transportation costs are rising and the Chinese are allowing their currency to appreciate. The implication is that rather than exerting unpleasant political pressure on China, we should trust in the natural workings of the market and the good common sense of the Chinese leaders who “appear to understand the need for change.”

Don’t hold your breath.

Porter is correct that wages are rising in China faster than they are in the United States. But for perspective, check out the Bureau of Labor Statistics’ numbers on international labor costs in manufacturing: the latest data, for 2008, show Chinese manufacturing labor costs at a little over 4 percent of U.S. levels. Yes, they have probably risen since then, but the gap is still immense and will clearly not be closed anytime soon.

Moreover, the narrowing of the gap may have as much to do with U.S. workers getting less as with Chinese workers getting more. The corporate poster boy for looking at the bright side is General Electric, which has moved production of a few heavy appliances back to the U.S. from China. What the poster leaves out is that GE workers who used to make $22 an hour are now making $13.

It is also true that rising fuel costs are making it more expensive to import large, heavy products from across the Pacific. But that hardly means that production will move back to the U.S. Thanks to the North American Free Trade Agreement, multinational producers of big appliances, autos, and auto parts who find importing from China too expensive are moving to Mexico, where labor costs are 18 percent of what they’d pay in the U.S.

Finally, Porter writes that the Chinese strategy of manipulating their currency to keep their exports cheap and imports expensive “may be turning the corner.” He notes that the Chinese, while they don’t want to appear to be caving to American pressure, have quietly allowed the renminbi to appreciate 40 percent against the dollar since 2005.

Just so. And over that time our trade deficit with China has grown by over 45 percent, suggesting how large China’s comparative advantage in trade has become. Moreover, despite the endless parade of American officials to Beijing pleading for more currency appreciation, the Chinese apparently think they’ve already done enough. Porter himself quotes China’s premier Wen Jiabao to the effect that the dollar-renminbi exchange rate may now “have reached equilibrium level.”

Thus, there is little evidence that either the market or the Chinese leadership intends to rescue the U.S. from its trade quagmire.

Unfortunately, neither is there evidence that American leaders, from either party, intend to take responsibility for doing it themselves. Not only do they have no strategy to deal with the trade deficit, but President Obama and congressional Republicans are busily preparing yet another so-called free trade agreement, this one with a group of countries around the Pacific Rim. Such agreements have allowed our multinationals to offshore production for the American consumer for over three-and-a-half decades.

But the market will not be denied; eventually we will balance our trading account. So, in the absence of a proactive policy, GE will be the model—the relentless lowering of American wages and living standards until the gap with workers in China and Mexico is closed.

Andrew Biggs is at it again

Comic Demetri Martin has this advice: “Only people in glass houses should throw stones, provided they are trapped in the house with a stone.”

Feeling trapped might explain the penchant of the American Enterprise Institute’s Andrew Biggs for stone throwing, such as accusing public pensions of projecting rosy rates of return (in Biggs’ view, anything higher than Treasury bond yields) despite the fact that he once hyped Social Security private accounts with promises of riches galore.

His latest: charging the National Institute of Retirement Security with advocating stone throwing—or at least window breaking—in order to stimulate the economy (apologies for the colliding metaphors).

Specifically, Biggs says NIRS ignores the cost to taxpayers in its research on the economic impact of public pensions, likening this to advocating window breaking as a way to create jobs for glaziers. Aside from the fact that the report in question repeatedly cites taxpayer costs, author Ilana Boivie is straightforward about the fact that her study measures the gross (not net) economic impact, as even Biggs eventually acknowledges. Thus, the economic stimulus from pensions can be compared to other forms of saving or spending without being limited to a specific counterfactual.

Biggs seems to think the relevant comparison should be with cutting public pension benefits and refunding the cost to taxpayers, as if benefits could be cut without damaging employee recruitment or retention and, by extension, public services. He also cites another potential counterfactual: What would happen to the economy if state and local governments switched from traditional defined benefit pensions to 401(k)-style defined contribution plans? In theory, contributions to retirement plans should increase to make up for these plans’ inefficiency due to high fees and a lack of risk pooling. In practice, 401(k) contributions tend to be grossly inadequate, since anxiety, it appears, is not a good motivator. So such a switch would more likely lead to a decline in saving, an increase in consumption spending, and a short-run boost to our economy, albeit for all the wrong reasons (in other words, it would be rather like breaking windows to boost the economy). In the long run, you’d have to factor in an upward redistribution of wealth to high-income households who benefit the most from these accounts and whose higher saving rate would be a drag on our demand-constrained economy.

You can see how it gets complicated. Not for Biggs, though. For him, increasing savings in private accounts would lead to an earthly paradise, making Americans “not only richer, but also happier, healthier, more familial, smarter, and more active citizens.”

He said this back in the day when he was promoting President George W. Bush’s plan to partially privatize Social Security. Of course, Biggs took into account the cost in the form of reduced guaranteed benefits…

Actually, he didn’t. Which gets back to Biggs’ habit of accusing others of sins he’s committed. Maybe he feels trapped by Dean Baker in a crystal palace of Social Security privatization, and this is the only way he can think of getting out?

Price of a diploma: Class of 2012 faces tough job market, rising costs, and increasing debt

There was a great article in Monday’s Wall Street Journal that discussed the tough job market the Class of 2012 is facing. Many of these new graduates will be competing with the graduating classes of 2011 and 2010 just to get on the bottom rungs of the career ladder. While it’s well-documented that graduating into a depressed labor market lowers lifetime earnings potential on average, today’s young graduates have additional hurdles to worry about: rising higher education costs and crippling student debt.

Together with economist Heidi Shierholz, we recently released an EPI analysis of the labor market for recent high school and college graduates. The results are predictably grim, with unemployment rates for both sets of graduates spiking at the beginning of the Great Recession and falling very slowly in the recovery.

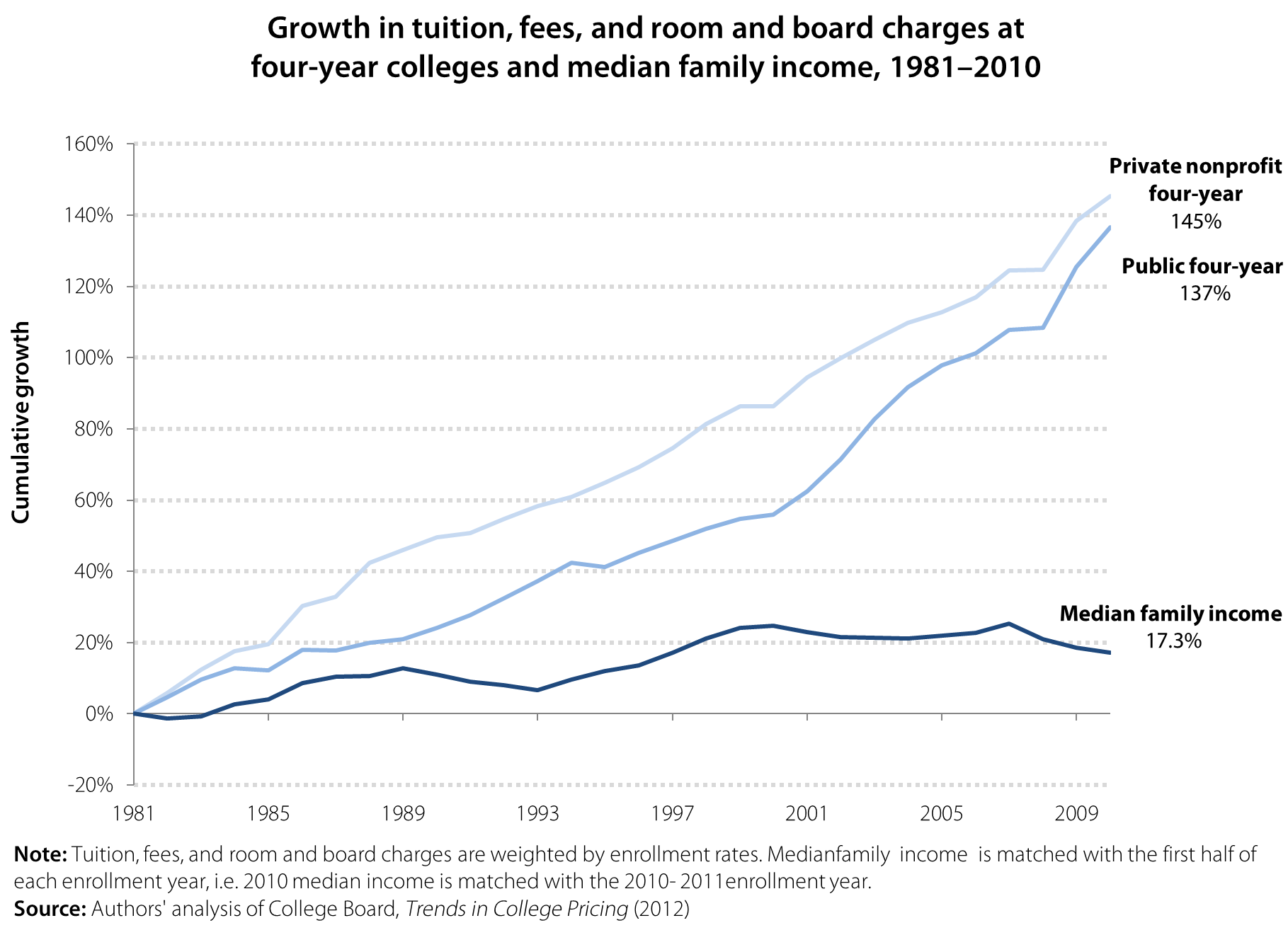

The report also highlighted the rising cost of obtaining a college degree. The figure below shows that the cost of higher education has been rising faster than family incomes for decades, making it harder for families to pay for college. From the 1981–82 enrollment year to the 2010–11 enrollment year, the cost of a four-year education increased 145 percent for private school and 137 percent for public school. Median family income, by contrast, increased only 17.3 percent from 1981 to 2010, far below the increases in the cost of education, leaving families and students unable to pay for most colleges and universities in full.
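To see what these growth rates mean for affordability, compare costs to income at the end of the period, with both normalized to 1.0 at the start. The Python sketch below assumes the quoted percentages are measured on the same basis (for example, both adjusted for inflation), which the text does not state explicitly; the function name is illustrative.

```python
# Growth rates from the text: college costs, 1981-82 to 2010-11;
# median family income, 1981 to 2010.
COST_GROWTH = {"private four-year": 1.45, "public four-year": 1.37}
INCOME_GROWTH = 0.173


def relative_cost_index(cost_growth: float, income_growth: float) -> float:
    """End-of-period cost relative to income, with both set to 1.0 at the start.

    Assumes both growth rates are measured on the same basis (e.g., real dollars).
    """
    return (1 + cost_growth) / (1 + income_growth)


for school, growth in COST_GROWTH.items():
    index = relative_cost_index(growth, INCOME_GROWTH)
    print(f"{school}: cost relative to income is {index:.2f} times its starting level")
```

Under that assumption, a four-year degree costs roughly twice as much relative to median family income (about 2.09 times for private schools and 2.02 times for public schools) as it did in the early 1980s.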

Unsurprisingly, a large majority of students and recent graduates take on debt to pay for college. Two-thirds of recent college graduates have student loans, and trends indicate that the number of student loans is increasing. Between 1993 and 2008, average student debt for graduating seniors increased 68 percent, from $14,410 to $24,238. Average debt for graduating seniors at public universities was $21,105 in 2008, and average debt for graduating seniors at private nonprofit universities was $28,888 (authors’ analysis of Project on Student Debt 2010).

In taking on these loans, students are taking a risk, hoping that they will quickly secure work and begin paying the loans off after graduation. In recent years, and through no fault of their own, a growing number of graduates have been on the wrong side of this risk, with harsh consequences. Although most student loans have a six-month grace period before repayment begins, recent graduates who do not find a stable source of income may be forced to postpone payment through deferment or forbearance, miss a payment, or, worst of all, default on their loans altogether. Deferment and forbearance are only short-term fixes, however, and they ultimately increase the amount borrowers owe once each period ends. Missed payments and default can ruin young workers’ credit scores and set them back years when it comes to saving for a house or a car.
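Two pieces of arithmetic in this passage can be checked directly. The Python sketch below first confirms the 68 percent growth in average debt, then illustrates how forbearance can raise the amount owed. The second part rests on hypothetical assumptions not in the text: a 6.8 percent fixed annual rate, interest accruing as simple interest during a 12-month forbearance, and all unpaid interest added to principal (capitalized) when the period ends. The helper function is likewise hypothetical.

```python
# Part 1: growth in average debt for graduating seniors, 1993 to 2008 (from the text).
debt_1993 = 14_410
debt_2008 = 24_238
growth = (debt_2008 - debt_1993) / debt_1993
print(f"Growth in average debt: {growth:.1%}")  # 68.2%


# Part 2 (hypothetical): a 6.8% fixed rate, simple interest accruing during
# forbearance, and unpaid interest capitalized when forbearance ends.
def balance_after_forbearance(principal: float, annual_rate: float, months: int) -> float:
    """Principal plus simple interest that accrues, unpaid, over the forbearance period."""
    accrued_interest = principal * annual_rate * months / 12
    return principal + accrued_interest


new_balance = balance_after_forbearance(debt_2008, 0.068, 12)
print(f"Balance after 12 months of forbearance: ${new_balance:,.2f}")  # $25,886.18
```

Under these assumptions, a year of forbearance on the 2008 average balance adds roughly $1,650 to what the borrower owes, and future interest is then charged on the larger balance.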

Even worse, these young workers do not have a strong safety net on which to rely during the volatile job-seeking process.

Young workers are often ineligible for unemployment insurance, for example, because they must first meet state wage and work minimums during an established reference period, a period during which they are often in school and not working. Many new graduates are likely to turn to their families for assistance. In 2011, 54.6 percent of 18- to 24-year-olds were living with their parents, an increase of 3.4 percentage points since 2007. This option is not, obviously, available to all young graduates. In short, young workers are being squeezed from multiple angles, and their plight is yet another instance of how damaging our tolerance of an underperforming economy truly is.

Additional findings and more analysis on the labor market facing young high school and college graduates can be found in our report, The Class of 2012: Labor market for young graduates remains grim.

Depressing graph of the day: The long-term unemployed

The Pew Fiscal Analysis Initiative has released an addendum to its 2010 report A Year or More: The High Cost of Long-Term Unemployment, and the update isn’t pretty. Using data from the Bureau of Labor Statistics’ Current Population Survey, Pew’s addendum finds that 29.5 percent of unemployed Americans in the first quarter of 2012 had been jobless for a year or more. That means 3.9 million working-age Americans haven’t been able to find a job in 12-plus months.

In 2008, during the first quarter of the Great Recession, 9.5 percent of the unemployed had been jobless for at least a year. This share peaked at 31.8 percent in the third quarter of 2011, and although it has eased since then, it is still very high: more than three times what it was at this point four years ago. Note also that BLS defines the long-term unemployed as those who have been jobless for more than half a year (27 weeks or more). By this measure, 41.3 percent of the jobless still qualify as long-term unemployed.
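The comparisons above reduce to a few ratios. This Python sketch uses only the Pew/CPS shares quoted in the text; the implied total number of unemployed is derived from rounded inputs, so it is approximate and not a published figure.

```python
# Shares of the unemployed who had been jobless for a year or more (from the text).
share_2008q1 = 0.095
share_2011q3_peak = 0.318
share_2012q1 = 0.295
long_term_2012q1_millions = 3.9

ratio = share_2012q1 / share_2008q1
change_from_peak = (share_2012q1 - share_2011q3_peak) * 100  # percentage points

# Implied total unemployed, derived from rounded inputs, so only approximate.
total_unemployed = long_term_2012q1_millions / share_2012q1

print(f"2012Q1 share vs. 2008Q1 share: {ratio:.1f} times as high")  # 3.1
print(f"Change from the 2011Q3 peak: {change_from_peak:.1f} percentage points")  # -2.3
print(f"Implied total unemployed: about {total_unemployed:.1f} million")  # 13.2
```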

Some other findings from the Pew analysis:

- Age: Older workers are less likely to lose their jobs, but much more likely to be jobless for a year or more once they do (see Figure 3 in the addendum).

- Education: Workers with higher levels of education are less likely to lose their jobs, but they’re no better off once they do as long-term joblessness is fairly even across all education levels (see Figure 5).

- Industry: No industry or occupation has been spared long-term unemployment (see Table 3).

Continued high levels of long-term unemployment have a damaging impact on the economic situations of both individuals and families, and more broadly, on the economy as a whole. As EPI has documented before, the outcome of such long-term joblessness is “scarring,” which carries severe and long-lasting consequences for our economy and society.

What we should talk about when we talk about Social Security

Love?

That’s the original word in the Raymond Carver short story collection paraphrased in this blog title, and in a perfect world, that’s all we’d need to say: Americans love Social Security because it takes care of the people they love.

But this is Washington, so that won’t cut it. Fortunately, our friends at Social Security Works have hired some smart people to think about why we’re losing the messaging war on Social Security, despite the program’s popularity and the fact that transparently partisan attacks (“Ponzi scheme”) haven’t found much traction.

What has gotten traction: a decades-long and lavishly funded campaign to convince younger workers that Social Security won’t be there when they retire, which opens the door to all sorts of shenanigans. Many Washington insiders, from across the political spectrum, have bought into the idea that Social Security is on an unsustainable course, and a willingness to slash benefits has become a “badge of fiscal seriousness” inside the Beltway, as Paul Krugman has noted.

Once the myth that Social Security is in trouble has taken root, attempts to dispel it with facts can inadvertently reinforce it, because the issue becomes technical and confusing, and people tend to assume that if you’re arguing about a problem, then it must exist. (If-there’s-smoke-there-must-be-fire logic isn’t always wrong, by the way: The Federal Reserve’s repeated attempts to pooh-pooh the existence of housing and stock bubbles should have put people on alert long before the bubbles burst in 2007.)

The good news, as John Neffinger of KNP Communications explained at a recent meeting of Social Security advocates, is that positive messages about Social Security can stick if presented the right way and in the right order.

Here are some DOs and DON’Ts, according to Neffinger:

- DON’T fight on their terrain (“Social Security isn’t going broke”).

- DO focus on the fact that Social Security is the only secure pillar of our retirement system (“If the middle class can’t count on Social Security in their retirement years, what can it count on? Not home equity, or 401(k)s or IRAs…”). In this vein, push for strengthening the program, as Michael Hiltzik does in this recent Los Angeles Times article.

- DO explain attacks on Social Security as attempts to dismantle the system for private gain (“Wall Street stands to make billions from managing more private accounts”) or political ideology (“They take their marching orders from someone who wants to shrink government to the size that they can drown it in a bathtub”).

- DO address the solvency issue by showing that simple adjustments can maintain the system (“Scrap the cap, so everyone pays the same percentage of their income in payroll tax”).

- But DON’T lead with the last two points, or you risk sounding partisan and reinforcing the myth that Social Security is in crisis.

The beauty of this framework, at least in theory, is that it avoids talking points that can backfire, like accusing Congress of raiding Social Security. It also sidesteps confusing topics like the trust fund that are hard to explain in a sound bite.
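The "scrap the cap" message rests on simple arithmetic: because the Social Security payroll tax applies only to earnings up to a cap, the effective rate falls as earnings rise above it. A minimal Python sketch, assuming the statutory 6.2 percent employee rate and the 2012 taxable maximum of $110,100 (figures not given in the text), shows the effective rate with and without the cap.

```python
from typing import Optional

# Assumptions (not from the text): the statutory 6.2% employee Social Security
# rate and the 2012 taxable maximum of $110,100; the temporary 2011-2012
# payroll tax holiday is ignored.
EMPLOYEE_RATE = 0.062
TAXABLE_MAX_2012 = 110_100


def effective_rate(wages: float, cap: Optional[float]) -> float:
    """Social Security tax paid as a share of total wages; cap=None means no cap."""
    taxable = wages if cap is None else min(wages, cap)
    return taxable * EMPLOYEE_RATE / wages


for wages in (40_000, 110_100, 500_000):
    with_cap = effective_rate(wages, TAXABLE_MAX_2012)
    without_cap = effective_rate(wages, None)
    print(f"${wages:,}: {with_cap:.2%} with the cap, {without_cap:.2%} without it")
```

Under these assumptions, a worker earning $40,000 pays 6.2 percent of wages, while one earning $500,000 pays about 1.37 percent; removing the cap makes the rate the same for both.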

Addressing price parity concerns

The Atlantic’s Derek Thompson had a nice write-up on the Future of Work paper that EPI released April 27. In his article, Thompson included a bar chart illustrating “where poverty lives,” showing the 10 states with the highest share of workers making less than poverty-level wages, as defined in the paper. Thompson’s chart also included the share of workers in these states making between 100 and 200 percent of poverty wages. His bar chart clearly demonstrated that in these states, between 70 and 80 percent of workers earned less than twice the official poverty threshold for a family of four in 2010, or around $46,000 annually. (As a side note on poverty measures, my colleague David Cooper did a nice blog post explaining how a more dynamic method of assessing poverty, the Census Bureau’s Research Supplemental Poverty Measure, both defines millions more Americans as being impoverished and shows a far greater proportion of people living at very modest means, when compared with the official measure.)

A few commenters on Thompson’s piece raised a good point about comparing wages by geographic location (in this case, by state). Some were quick to point out that the 10 states with the highest shares of workers earning at or below poverty-level wages were “red states,” while others pointed out that the analysis did not take into account purchasing power parity, that is, the fact that a dollar may buy less in Manhattan than it does in rural Kansas. Their point was simply that a worker earning $10 per hour in Kansas may actually fare better than someone earning far more who has to pay rent and buy goods and services in a traditionally pricey market such as New York City, San Francisco, or Washington, D.C.

The table below adjusts for this by scaling the poverty wage used in the paper ($10.73 per hour in 2010) by a regional price parity index calculated by the Bureau of Economic Analysis. (Specifically, I averaged the BEA’s measures for 2005 and 2006, which come from a BEA Research Spotlight titled Regional Price Parities: Comparing Price Level Differences Across Geographic Areas.) The table shows the adjusted poverty wage level by state and the share of people in each state earning at or below that level. While Hawaii is something of an anomaly due to its separation from the lower 48 states by 2,500 miles of ocean, the next three states with the highest shares of workers earning less than the adjusted poverty wage are New York, California, and New Jersey: all states that contain very large metropolitan areas (as well as more rural areas). An even more accurate breakdown of regional price parity would show differences between rural and urban areas, as well as safety net services available for low-wage workers by region, but that is beyond the scope of this blog post.
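The adjustment described above amounts to multiplying the national poverty wage by each state's price index, where 100 equals the national average. The Python sketch below assumes exactly that simple multiplicative form; it does not reproduce the averaging of BEA's 2005 and 2006 estimates, and the index values it prints are backed out from the adjusted wages in the table, not taken from BEA. The function names are illustrative.

```python
# Sketch of the regional adjustment described above: scale the national poverty
# wage by a price index where 100 = the national average price level.
NATIONAL_POVERTY_WAGE_2010 = 10.73  # dollars per hour, from the paper


def adjusted_poverty_wage(price_index: float) -> float:
    """Poverty wage adjusted for a state's price level (index of 100 = national average)."""
    return NATIONAL_POVERTY_WAGE_2010 * price_index / 100


def implied_price_index(adjusted_wage: float) -> float:
    """Back out the price index implied by an adjusted wage reported in the table."""
    return 100 * adjusted_wage / NATIONAL_POVERTY_WAGE_2010


for state, wage in [("New York", 14.10), ("Kansas", 8.97), ("West Virginia", 7.33)]:
    print(f"{state}: implied price index of about {implied_price_index(wage):.0f}")
```

On this reading, New York's adjusted wage implies prices about 31 percent above the national average, while Kansas's implies prices about 16 percent below it.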

Adjusted share of workers earning poverty wages by state, 2010

| State | Share earning at or below adjusted poverty wage | Adjusted poverty wage (per hour) |
|---|---|---|
| Hawaii | 40.6% | $14.04 |
| New York | 38.4% | $14.10 |
| California | 37.8% | $13.75 |
| New Jersey | 31.8% | $13.42 |
| Rhode Island | 31.6% | $12.24 |
| Nevada | 27.7% | $10.74 |
| Connecticut | 27.5% | $13.20 |
| Illinois | 26.3% | $10.81 |
| Michigan | 26.3% | $10.04 |
| Massachusetts | 26.2% | $12.99 |
| New Hampshire | 26.2% | $12.21 |
| Florida | 25.2% | $10.59 |
| Oregon | 25.0% | $10.29 |
| Arizona | 24.5% | $10.22 |
| Texas | 24.5% | $9.81 |
| Virginia | 23.8% | $10.96 |
| Delaware | 22.5% | $11.44 |
| Vermont | 22.2% | $10.80 |
| Maryland | 21.7% | $11.38 |
| Pennsylvania | 21.4% | $10.06 |
| Washington | 21.2% | $11.08 |
| Tennessee | 20.6% | $9.00 |
| Colorado | 20.6% | $10.46 |
| Alaska | 19.8% | $11.24 |
| Ohio | 19.5% | $9.42 |
| Indiana | 19.0% | $9.16 |
| Minnesota | 18.8% | $10.20 |
| Georgia | 18.3% | $9.48 |
| North Carolina | 18.2% | $9.41 |
| Louisiana | 18.2% | $8.76 |
| Maine | 18.0% | $9.92 |
| Utah | 17.6% | $9.37 |
| Mississippi | 17.5% | $8.42 |
| Wisconsin | 17.4% | $9.82 |
| Kentucky | 17.4% | $8.69 |
| Alabama | 17.0% | $8.50 |
| Oklahoma | 16.5% | $8.67 |
| Idaho | 16.5% | $8.87 |
| New Mexico | 16.4% | $8.91 |
| Nebraska | 15.6% | $9.39 |
| Missouri | 15.5% | $8.79 |
| South Carolina | 15.3% | $8.92 |
| Kansas | 14.8% | $8.97 |
| Wyoming | 14.6% | $9.31 |
| Montana | 14.4% | $8.87 |
| Arkansas | 14.2% | $8.34 |
| Iowa | 14.0% | $8.95 |
| District of Columbia | 13.1% | $10.61 |
| South Dakota | 12.4% | $8.67 |
| North Dakota | 10.7% | $8.28 |
| West Virginia | 6.9% | $7.33 |

Source: Author's analysis of Current Population Survey Outgoing Rotation Group microdata, Bureau of Economic Analysis data

Note: Data were calculated using poverty data for a four-person household

The bottom line of this state-by-state comparison is not whether the states that show a high share of low-wage workers are red or blue; it is the often-enormous share of people earning very low wages. As this National Low Income Housing Coalition report points out, for full-time workers earning what the report calls the “renter wage,” a two-bedroom unit is unaffordable in nearly every state. The report also has some really interesting data, broken down by state, metropolitan area, and county, showing how many full-time minimum wage jobs a household would need to hold to afford a two-bedroom unit at fair market rent (FMR). The data show that whether low-wage workers live and work in New York County (3.8 full-time minimum wage jobs to afford an FMR two-bedroom) or Wichita, Kan. (1.7 full-time minimum wage jobs to afford an FMR two-bedroom), their wages are likely not enough to cover their expenses.
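The "jobs needed" figures can be reproduced in rough form. The Python sketch below assumes the 30-percent-of-income affordability standard commonly used in housing analysis, full-time work of 40 hours a week for 52 weeks, and a $7.25 minimum wage; these are assumptions for illustration, and the coalition's own method may use different wages or rents. Running the calculation backward gives the monthly rents implied by the New York County and Wichita figures under those assumptions. The function names are hypothetical.

```python
# Assumptions (not from the text): rent is "affordable" at no more than 30% of
# gross income, full-time work is 40 hours x 52 weeks, and the wage is $7.25.
AFFORDABLE_SHARE = 0.30
FULL_TIME_HOURS = 40 * 52
MIN_WAGE = 7.25


def jobs_needed(monthly_rent: float, wage: float = MIN_WAGE) -> float:
    """Full-time jobs at `wage` needed so that rent is 30% of combined income."""
    income_needed = monthly_rent * 12 / AFFORDABLE_SHARE
    return income_needed / (wage * FULL_TIME_HOURS)


def implied_rent(jobs: float, wage: float = MIN_WAGE) -> float:
    """Monthly rent implied by a 'jobs needed' figure under the same assumptions."""
    return jobs * wage * FULL_TIME_HOURS * AFFORDABLE_SHARE / 12


for place, jobs in [("New York County", 3.8), ("Wichita, Kan.", 1.7)]:
    print(f"{place}: about ${implied_rent(jobs):,.0f} a month")
```

Under these assumptions, the figures correspond to two-bedroom rents of roughly $1,433 a month in New York County and $641 in Wichita.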

How Romney can show support for working women

From 2007 to 2011, 70 percent of the jobs lost in the state and local public sector were jobs held by women. This amounts to roughly 765,000 jobs. Since 2011, we have continued to see public-sector job losses, and, unfortunately, it is a good bet that the declines will continue.

The Obama administration has provided aid to state and local government in a variety of ways. For example, the American Recovery and Reinvestment Act (ARRA) injected more than $180 billion into state and local government. This aid has helped preserve significant numbers of jobs held by women. Women can be found in all types of public-sector jobs, but they are overrepresented in the public sector, in part, because they are more likely to be teachers. So far, $90 billion from ARRA has gone to education.

Out of a desire to appeal to women voters, Mitt Romney’s campaign has been making misleading statements about President Obama’s record. One way for Romney to show legitimate support for working women is to promise that, if elected, he will provide ample federal aid to state and local government. Unfortunately, Romney’s current commitment to shrink government means that he will put even more women out of work.

Underemployment isn’t a ‘myth’ for recent college grads

As readers of this blog are well aware, the labor market remains in terrible shape in the aftermath of the worst downturn since the Great Depression; this is evident in a wide array of economic data and is not disputed in the economics profession. Graduating into this labor market (in which the level of voluntary quits remains weak) with little to no work experience or wage history isn’t an enviable position, as my colleagues Heidi Shierholz, Natalie Sabadish, and Hilary Wething detail in their new paper The Class of 2012: Labor market for young graduates remains grim. That is why I was flabbergasted by Abigail Johnson and Tammy NiCastro’s recent Forbes.com blog post Get Over It: The Truth About College Grad ‘Underemployment.’ Their title is plenty revealing, but here’s the gist of their argument:

“In recent weeks, there have been a slew of articles that reported how difficult things will be for this year’s college graduates because they can expect to be unemployed or “underemployed” … It’s not clear where the concept of being “underemployed” came from. But it’s damaging and counterproductive.”

The Bureau of Labor Statistics’ (BLS) U-6 Alternative Measure of Labor Underutilization—often referred to as the underemployment rate—is not a myth. It’s defined as such: “Unemployed, plus all persons marginally attached to the labor force, plus total employed part time for economic reasons, as a percent of the civilian labor force plus all persons marginally attached to the labor force.” EPI’s State of Working America website even tracks it on a monthly basis across educational attainment, gender, and race and ethnicity. Here’s what it looks like by educational attainment:

Not a pretty picture. Since the onset of the recession more than four years ago, underemployment has roughly doubled across all educational attainment levels (a clear indicator that the economy suffers from a sheer lack of aggregate demand—the economy is running $853 billion below potential output—rather than “structural” employment problems). With so much excess slack in the labor market, employers have all the bargaining power, hence anemic wage growth and the “employed part time for economic reasons” (i.e., involuntarily) part of the underemployment rate. Horatio Alger can’t set his hours worked—there simply aren’t enough hours of work being demanded in the depressed economy.
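To make the BLS definition of U-6 quoted earlier concrete, here is a minimal Python sketch that computes it alongside the official unemployment rate (U-3). The function names and the round input numbers are hypothetical, chosen only to show how adding the marginally attached and involuntary part-timers raises the measured rate; they are not BLS data.

```python
# U-3 and U-6 following the BLS definitions; inputs below are hypothetical (millions).


def u3(unemployed: float, labor_force: float) -> float:
    """Official unemployment rate: unemployed as a share of the civilian labor force."""
    return unemployed / labor_force


def u6(unemployed: float, marginally_attached: float,
       part_time_economic: float, labor_force: float) -> float:
    """Unemployed + marginally attached + part time for economic reasons,
    as a share of the civilian labor force plus the marginally attached."""
    numerator = unemployed + marginally_attached + part_time_economic
    denominator = labor_force + marginally_attached
    return numerator / denominator


print(f"U-3: {u3(10, 100):.1%}")        # 10.0%
print(f"U-6: {u6(10, 2, 8, 100):.1%}")  # 19.6%
```

Note that the marginally attached are added to both the numerator and the denominator, which is why U-6 is not simply the sum of its parts divided by the labor force.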

And as Shierholz, Sabadish, and Wething detail, it looks much, much worse for recent high school and college graduates entering the labor market. Over the last year, the unemployment rate averaged 31.1 percent for recent high school graduates and 9.4 percent for recent college graduates. The underemployment rates averaged 54 percent and 19.1 percent, respectively. High unemployment and underemployment, and the depressed earnings that accompany them early in a career, will result in long-term economic scarring, particularly diminished lifetime earnings. Beyond underemployment as measured by the BLS, skills- and education-based underemployment (so-called “cyclical downgrading”) will contribute to lifetime earnings scarring; as my colleagues note, “entering the labor market in a severe downturn can lead to reduced earnings, greater earnings instability, and more spells of unemployment over the next 10 to 15 years.”

In suggesting that college grads should be grateful to take a job at Starbucks because they aren’t “entitled” to anything more, the authors blithely overlook that recent college graduates working part-time at Starbucks for economic reasons are likely displacing hours from someone with lower educational attainment. This is in no way an indictment of any such recent graduates. Rather, what is truly damaging and counterproductive is economically illiterate “thought pieces” that breed complacency about the state of the labor market and the policy response to the Great Recession. Neither a college degree nor government can guarantee recent grads “good jobs” or full-time employment, but between the Great Depression and the Great Recession, government prioritized stabilizing the economy and targeting full employment, to the benefit of workers at all educational attainment levels. Underemployment of recent graduates is not a myth cooked up to breed entitlement, as the authors imply; it is a reality, and a tragic failure of policymakers to address the jobs crisis.

Video: Paul Krugman discusses his new book

Yesterday, Nobel-winning economist and New York Times columnist Paul Krugman spoke at EPI about his new book, End This Depression Now! A key point of the book and of his speech is that there’s a common and very wrong belief that the economy is like a morality play: Lots of people made irresponsible decisions in the run-up to the economic collapse, and, like someone nursing a hangover, they must now suffer the consequences of their actions.

As Krugman points out, most of the people who have been hurt by this crisis do not deserve the blame. Over eight million people lost their jobs, and with the unemployment rate above 8 percent for more than three years now, many of those same workers, along with new entrants to the labor market, have been unable to find work. Their jobs disappeared through no fault of their own, and jobs are not coming back nearly fast enough to get everyone back to work anytime soon.

More importantly, Krugman points out that, unlike a hangover that needs to be waited out, we could easily fix the economy now and put these millions of people back to work. First, we should halt the fiscal austerity efforts that recently doomed the British economy. Second, we should embark on aggressive fiscal expansion to boost consumer and business spending, stimulating demand for goods and services and creating jobs. As Krugman notes, this is basic Econ 101. All we need is the political will.

What world of fiscal policy is Michael Gerson inhabiting?

Michael Gerson’s recent op-ed in the Washington Post hailed Rep. Paul Ryan (R-Wis.) as the champion of “Reform Conservatism,” largely out of admiration for Ryan’s budget. In doing so, Gerson displayed a remarkable misunderstanding of both Ryan’s budget and fiscal policy at large. This adulation of Ryan, totally divorced from the policy specifics that supposedly legitimize him, is exactly the kind of inside-the-Beltway nonsense that has driven Jonathan Chait apoplectic (see his New York Magazine piece on Ryan).

Gerson’s major offenses are twofold, but he manages to hit both in the same sentence: “[The Ryan budget] deals seriously with the fiscal crisis — which, driven by demographics and cost increases, is a health entitlement crisis.” Let’s take these one at a time.

First off, Ryan’s budget is not serious—it’s gimmicky above and beyond the point of credibility. The Ryan budget proposes $4.5 trillion in tax cuts financed with a giant asterisk that wouldn’t come close to raising that much revenue and then simply “assumes” its desired revenue level (and forces the Congressional Budget Office to do the same in their long-term analysis). Under more reasonable assumptions about feasible “base-broadening,” the Ryan budget would push public debt north of 74 percent of GDP by the end of the decade, and roughly 84 percent of GDP if the tax cuts were entirely deficit-financed. (Ryan claims to hit 62 percent—we’re not talking rounding errors.)

Secondly, our long-run fiscal challenges overwhelmingly stem from the dual problems of escalating national health care expenditure and an addiction to tax cuts. Demographic change and health care inflation will certainly drive up federal health expenditure, but these trends are a broader national economic challenge with ramifications for the federal budget, not vice versa. And demographic change can’t be reformed away—it compels more revenue, not less.

So how seriously does Ryan deal with these underlying economic challenges? Not at all. He offers an accounting solution for the federal government (turning Medicare into a voucher system and slashing Medicaid) that would drive up national health expenditure. Economists Dean Baker and David Rosnick estimated that Ryan’s FY2012 budget would increase national health expenditure by $30 trillion over 75 years if seniors purchased Medicare-equivalent plans on the private market; that’s because Medicare is 11 percent cheaper than an equivalent private plan and is projected to be 28 percent cheaper by 2022. (The more likely result is more health expenditure as well as worse coverage and care.) Forsaking Medicare’s economies of scale and purchasing power would shift costs from the federal balance sheet to businesses, households, and state governments, while worsening the economic challenges at hand. Incidentally, the Affordable Care Act took the opposite approach, using government’s market share to lower costs, and it’s showing early signs of working; as the New York Times reported last week, national health spending has slowed markedly over the last few years. While much more can be done on this front, the ACA’s approach is a serious response to an economic problem, unlike slashing health care for the impoverished and disabled as the Ryan budget proposes.

Furthermore, long-anticipated demographic change is a reality that compels looking at both sides of the budget ledger and makes historical benchmarks poor guides for setting policy. Gerson even acknowledges that revenue must realistically rise above a post-war historical average of around 18 percent of GDP (versus 17.8 percent under current policy and 18.3 percent magically assumed in the Ryan budget, over the next decade), but he then turns a blind eye to the reality that, short of unspecified offsets, the Ryan budget would drop revenue to 15.5 percent of GDP over the next decade, according to the Tax Policy Center. Deficit-financed tax cuts also increase spending on debt service, which, like health care in Gerson’s argument, threatens to crowd out other government functions: under current policies, net interest spending, swollen by deficit-financed tax cuts, will exceed nondefense discretionary spending by the end of the decade. Gutting health care spending to partially finance massive, regressive tax cuts in no way equates to “addressing the fiscal crisis,” as Gerson adamantly claims Ryan is doing.

Gerson wants to believe the Republican Party cares about deficits, but its overriding focus, diametrically opposed to deficit reduction, is cutting taxes, overwhelmingly for those at the top of the income distribution. Ryan’s budget would indeed deeply cut health care spending, but it is neither focused on deficits nor serious; it’s about doubling down on the Bush-era tax cuts. And the only serious things about the Bush-era tax cuts were the hole they blew in the federal budget and their dismal economic legacy.

Racial inequality and the black homicide rate

I had the privilege of attending the W.K. Kellogg Foundation’s America Healing Conference last week. The America Healing initiative promotes racial healing to address racial inequity, and, in doing so, works “to ensure that all children in America have an equitable and promising future.”

At the conference, the Honorable Mitchell J. Landrieu, mayor of New Orleans, gave a moving, passionate, and brave speech about homicide in black communities. He challenged us to consider whether we devalue black lives by not paying sufficient attention to the more common forms of homicide in black communities and instead reserving our activism for homicides that could be seen as involving racism.

Landrieu made an important point, but I think he also missed a number of other significant points. The black homicide victimization rate is six times the white rate, so this is clearly a worthy issue to address. But it is important to note that the black homicide victimization rate was cut nearly in half from 1991 to 1999, declining 49 percent, while the white rate declined 39 percent. Too often we assume that things are always getting worse. It is worth acknowledging this dramatic positive change, even though the black and white homicide rates have been basically flat since the 1990s and there is much more to be done.

Landrieu also failed to acknowledge that much of the work done by the conference participants, if successful, is likely to reduce homicide rates. Homicide rates are driven by a very complex mix of psychological and sociological factors that criminologists do not yet completely understand, and probably a majority of the conference attendees work in areas that have the potential to reduce them.

Some of the participants work to improve educational outcomes for blacks. Research suggests that increases in educational attainment, particularly among males, will reduce homicide rates. (Males are more likely to commit homicide, and their social and economic circumstances likely play a big role in homicide rates.)

Healthy children do better in school and also have lower rates of criminal offending. All aspects of health, especially in the early years, probably matter, but we should be especially concerned about black children’s very high rates of exposure to lead, which has been strongly linked to violent crime. Thus, the participants who are working to reduce racial disparities in children’s health can also be seen as working to reduce homicide rates.

Concentrated economic disadvantage, poverty, and unemployment have all been found to be predictors of homicide rates. Participants working to improve the economic conditions of black communities can also be said to be working to reduce homicide rates.

A number of other aspects of racial inequity that the conference attendees work on are also likely drivers of higher black homicide rates. Thus, it is not accurate to say that the conference participants were not regularly working to address homicide.

Finally, while the mechanisms for reducing homicide rates are not yet completely understood, the response to bad policing, bad laws, and racially biased individuals is clearer. That may be part of the reason these issues generate highly visible mobilizations: a relatively quick mobilization might change bad police practices, undo a bad law, or change the behavior of a specific racially biased person. Undoing racial inequity in all of the factors found to drive homicide rates (health, education, economics, and more) will require a longer and deeper struggle.

It’s executives and the finance sector causing surging 1% income growth!

It is well known, and rightly so, that the incomes of the top 1 percent have fared fabulously. But it was not until Jon Bakija, Adam Cole, and Bradley Heim analyzed tax returns that the doubling of the top 1 percent’s income share could be directly traced to trends in executive compensation and finance-sector pay. The new EPI paper, CEO pay and the top 1%: How executive compensation and financial-sector pay have fueled income inequality, which previews some of the findings from the forthcoming State of Working America, documents exactly that.

Between 1979 and 2005 (the latest data available with these breakdowns), the share of total income held by the top 1.0 percent more than doubled, from 9.7 percent to 21.0 percent, with most of the increase occurring since 1993. The top 0.1 percent led the way by more than tripling its income share, from 3.3 percent to 10.3 percent. This 7.0 percentage-point gain in income share for the top 0.1 percent accounted for more than 60 percent of the overall 11.2 percentage-point rise in the income share of the entire top 1.0 percent.
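The share arithmetic in the paragraph above can be checked in a few lines. This is a minimal Python sketch that uses only the figures quoted in the text; because the endpoint shares are rounded to one decimal (21.0 minus 9.7 gives 11.3), it uses the stated 11.2-point rise for the top 1.0 percent rather than recomputing it.

```python
# Income shares (percent of total income) in 1979 and 2005, as quoted in the text.
top1_1979, top1_2005 = 9.7, 21.0
top01_1979, top01_2005 = 3.3, 10.3

# The text reports an 11.2-point rise for the top 1.0 percent; the rounded
# endpoints above would give 11.3, so the stated figure is used here.
top1_gain = 11.2
top01_gain = top01_2005 - top01_1979  # 7.0 percentage points

print(f"Top 1.0% share grew by a factor of {top1_2005 / top1_1979:.2f}")
print(f"Top 0.1% share grew by a factor of {top01_2005 / top01_1979:.2f}")
print(f"Top 0.1% gain as a share of the top 1.0% gain: {top01_gain / top1_gain:.1%}")
```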

The increases in income at the top were largely driven by households headed by either an executive or someone working in the financial sector, as an executive or as another kind of worker. Households headed by a non-finance executive were associated with 44 percent of the growth in the top 0.1 percent’s income share and 36 percent of the growth for the top 1.0 percent. Those in the financial sector were associated with nearly a fourth (23 percent) of the expansion of the income shares of both the top 1.0 and top 0.1 percent. Together, finance and executives accounted for 58 percent of the expansion of income for the top 1.0 percent of households and an even greater two-thirds share (67 percent) of the income growth of the top 0.1 percent of households.

The paper also presents new analysis of CEO compensation based on our tabulations of Compustat data. From 1978–2011, CEO compensation grew more than 725 percent, substantially more than the stock market and remarkably more than the annual compensation of a typical private-sector worker, which grew a meager 5.7 percent over this time period.
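To put the cumulative figures on an annual footing, here is a small Python sketch that converts them into compound annual growth rates over the 33 years from 1978 to 2011. It assumes simple compounding between the two endpoint years and treats 725 percent as a floor, since the text says “more than.”

```python
def annualized(cumulative_growth, years):
    """Convert cumulative growth (e.g. 0.057 for 5.7 percent) into a compound annual rate."""
    return (1 + cumulative_growth) ** (1 / years) - 1


YEARS = 2011 - 1978  # 33 years

ceo_rate = annualized(7.25, YEARS)      # "more than 725 percent", so a lower bound
worker_rate = annualized(0.057, YEARS)  # 5.7 percent for a typical private-sector worker

print(f"CEO compensation: at least {ceo_rate:.1%} a year")
print(f"Typical worker compensation: {worker_rate:.2%} a year")
```

Compounding shows the contrast plainly: a CEO growth rate of roughly 6.6 percent a year, against well under a fifth of a percent a year for the typical worker.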

One way to illustrate the growing divergence between CEO pay and a typical worker’s pay over time is to examine the ratio of the two, the CEO-to-worker compensation ratio, as shown in the figure. This ratio compares the compensation of CEOs at the 350 largest firms with the compensation of workers in the key industry of each CEO’s firm.

CEO-to-worker compensation ratio, with options granted and options realized, 1965–2011

Note: "Options granted" compensation series includes salary, bonus, restricted stock grants, options granted, and long-term incentive payouts for CEOs at the top 350 firms ranked by sales. "Options exercised" compensation series includes salary, bonus, restricted stock grants, options exercised, and long-term incentive payouts for CEOs at the top 350 firms ranked by sales.

Sources: Authors' analysis of data from Compustat ExecuComp database, Bureau of Labor Statistics Current Employment Statistics program, and Bureau of Economic Analysis National Income and Product Accounts Tables

Though lower than in other years of the last decade, the CEO-to-worker compensation ratio in 2011, 231.0 or 209.4 depending on how stock options are measured, is far above the ratio in 1995 (122.6 or 136.8), 1989 (58.5 or 53.3), 1978 (29.0 or 26.5), and 1965 (20.1 or 18.3). This illustrates that CEOs have fared far better than the typical worker over the last several decades. It is also true that CEO compensation has grown far faster than the stock market or the productivity of the economy.

Apple’s executive pay, profits, and cash balance show ability to assist its factory workers

Apple’s latest “blowout” quarterly report, as well as an examination of its executive pay levels, underscores how easy it would be for the company to improve the working conditions of the Foxconn workers in China assembling Apple products. As Ross Eisenbrey and I summarized recently: “Apple workers in China endure extraordinarily long hours (in violation of Chinese law and Apple’s code of conduct), meager pay, and coercive discipline.”

Apple could insist that Foxconn pay these workers more and treat them fairly, and it could easily pay for any additional costs. (The workers in question are employed on factory lines dedicated solely to producing Apple products.) To be sure, Apple could modestly raise the prices of its products, but it could also readily absorb these costs through some combination of tiny reductions in profits, small trims in its cash balance, or adjustments in its executive pay.

- The total compensation of the investigated Chinese workers making Apple products amounts to just 3 percent of Apple’s profits. In its most recent quarter, Apple’s after-tax profits equaled $11.6 billion. By comparison, over an overlapping three-month period, the total compensation of the 288,800 Foxconn workers making Apple products equaled an estimated $350 million, or 3.0 percent of Apple’s after-tax profits. (I calculated this figure based on the average monthly pay of all Foxconn factory employees, including supervisors, found by the Fair Labor Association; the worker count covers the three factories investigated.) This finding parallels one in a recent blog post by Ross: labor costs at Foxconn are a “miniscule part of the iPhone’s costs.”

- The total annualized compensation of the investigated Chinese workers making Apple products amounts to just over 1 percent of Apple’s cash/securities surplus. At the end of the most recent quarter, Apple had $10.1 billion in cash and cash equivalents, $18.4 billion in short-term marketable securities, and $81.6 billion in long-term marketable securities, for a total balance of $110 billion. By comparison, the total annualized compensation of the 288,800 Foxconn workers making Apple products is about $1.4 billion, or 1.3 percent of Apple’s cash/securities surplus.

- In 2011 and 2012, the top nine members of Apple’s executive team had total compensation equal to about 90,000 Chinese factory workers making its products. My just-released analysis found that in 2011, Apple’s nine-person executive leadership team received total compensation of $441 million. This was equivalent to the compensation of 95,000 factory workers at Foxconn assembling Apple products (making an estimated $4,622 per year).

In 2012, the executive team is on track to receive compensation of at least $412 million. This conservative estimate is equivalent to the compensation of 89,000 of the Chinese factory workers making Apple products.
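The ratios in these bullets follow directly from the quoted figures. Here is a minimal Python sketch that reproduces them; it assumes, as the text does, that one quarter’s pay multiplied by four approximates annual pay, and it uses no numbers beyond those stated above.

```python
# Figures quoted in the bullets above, in U.S. dollars.
apple_quarterly_profit = 11.6e9        # after-tax profit, most recent quarter
foxconn_quarterly_pay = 350e6          # estimated pay of 288,800 workers over about three months
cash_and_securities = 10.1e9 + 18.4e9 + 81.6e9
annual_pay_per_worker = 4_622          # estimated average annual pay per worker
exec_pay = {2011: 441e6, 2012: 412e6}  # nine-person executive team (2012 is a floor)

print(f"Quarterly pay / quarterly profit: {foxconn_quarterly_pay / apple_quarterly_profit:.1%}")

annualized_pay = 4 * foxconn_quarterly_pay  # about $1.4 billion
print(f"Annualized pay / cash and securities: {annualized_pay / cash_and_securities:.1%}")

for year, pay in exec_pay.items():
    workers = round(pay / annual_pay_per_worker, -3)  # round to the nearest thousand
    print(f"{year}: executive pay equals the pay of about {workers:,.0f} factory workers")
```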

The Social Security trustees report—now what?

As I wrote in an earlier blog, Social Security’s projected shortfall is around 20 percent larger than last year, though still less than one percent of GDP over the 75-year projection period. This doesn’t change the basic story that costs are rising from around 5 to 6 percent of GDP before leveling off after the Baby Boomer retirement, with costs at the end of the period slightly lower as a share of GDP than in the peak Boomer retirement years.

Raising Social Security taxes on both employers and workers from 6.2 percent to around 7.6 percent would close the projected shortfall.1 But there are better ways to raise the necessary revenue. The fairest and simplest is eliminating the cap on taxable earnings, which is currently set at $110,100. Though people pay income and Medicare taxes on all earned income (and will soon pay Medicare tax on unearned income as well), earnings above $110,100 aren’t subject to Social Security tax. Scrapping the cap would close 71-87 percent of the shortfall, depending on whether or not you increase benefits for high earners to reflect their higher contributions. Other no-brainers include covering newly-hired public-sector workers who currently aren’t in Social Security (closing 6 percent of the shortfall) and subjecting Flexible Spending Accounts and other salary-reduction plans to Social Security taxes (closing 9 percent).

Another option that has more mixed support among Social Security advocates is gradually increasing the contribution rate to offset increases in life expectancy. This would increase taxes very slowly—by 0.01 percentage points per year, much more slowly than projected wage growth—and would close around 15 percent of the shortfall if the increase began in 2025, after the gradual increase in the normal retirement age from 65 to 67 had been fully implemented. The advantage of this option is that it might take the issue of life expectancy, a favorite of Social Security alarmists, off the table. The disadvantage is that everyone would pay more, even low-income workers and others who’ve seen little or no increase in life expectancy. It’s also worth noting that it doesn’t raise that much money, because, contrary to myth, rising life expectancy is a relatively small factor in the emergence of the projected shortfall. A much bigger factor is slow and unequal wage growth, which has increased corporate profits and pushed a growing share of earnings above the cap, eroding Social Security’s tax base (see chart).

Source: Social Security Administration

Putting these together—scrapping the cap, covering public sector workers, taxing FSAs, and offsetting life expectancy through a gradual increase in the contribution rate—would be more than enough to close the projected shortfall. You can come up with your own plan by looking at the first column of figures in the table starting on p.8 here and dividing by 2.67 (the projected shortfall expressed as a share of payroll).
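The do-it-yourself method described above (divide an option’s effect, expressed as a percent of payroll, by 2.67) can be written out as a short Python sketch. The package entries are the shares of the shortfall quoted in this post; simply adding them, as done here, ignores interactions between options, so the totals are a rough check rather than an official score.

```python
SHORTFALL = 2.67  # projected 75-year shortfall, percent of taxable payroll (from the text)


def percent_closed(payroll_effect, shortfall=SHORTFALL):
    """Percent of the shortfall closed by an option worth `payroll_effect` percent of payroll."""
    return 100 * payroll_effect / shortfall


# Raising the employer and worker rates from 6.2 to about 7.6 percent each.
combined_increase = 2 * (7.6 - 6.2)  # about 2.8 percent of payroll
print(f"Rate increase: about {percent_closed(combined_increase):.0f}% of the shortfall")

# The package discussed above, as (low, high) percent of the shortfall closed.
package = {
    "scrap the taxable earnings cap": (71, 87),
    "cover newly hired public-sector workers": (6, 6),
    "tax FSAs and other salary-reduction plans": (9, 9),
    "tie the contribution rate to life expectancy from 2025": (15, 15),
}
low_end = sum(low for low, _ in package.values())
high_end = sum(high for _, high in package.values())
# Simple addition ignores interactions between options, so this is only a rough check.
print(f"Package: roughly {low_end}% to {high_end}% of the shortfall")
```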

Thanks to blog reader “Susan” and my friend Liz, whose questions prompted this follow-up post.

Endnotes

1. The combined increase (1.4 percentage points multiplied by two, or 2.8 percentage points) is slightly more than the size of the actuarial deficit measured as a share of payroll (around 2.7 percent) because some compensation would likely shift to untaxed benefits. This measure also conservatively assumes the trust fund should have enough at the end of the period to pay for a year of benefits without additional contributions, even though Social Security is primarily a pay-as-you-go program. Strictly speaking, the unfunded obligation is closer to 2.5 percent of payroll according to the trustees report.

Glenn Kessler’s wrong call on Romney’s Buffett Rule chicanery

I thought I’d never say this, but I think my colleague Andrew Fieldhouse is being soft on Glenn Kessler, writer of the Washington Post’s Fact Checker column. Long story short, Mitt Romney and the Republicans are criticizing the Buffett Rule for only raising $47 billion. Democrats say that score is bogus because it’s measured against a current law baseline in which the Bush tax cuts expire, and instead are using a $162 billion score that is measured against current policy (all the Bush tax cuts are assumed to continue). Kessler ends up defending the current law score and criticizing Democrats and other Buffett Rule supporters for using the current policy score.

Kessler’s wrong on both points. First, for conservatives to claim that the Buffett Rule raises only $47 billion over a decade is simply nonsense. The only groups that measure policy impacts on the assumption that the Bush-era tax cuts will expire are those legally required to do so: the Joint Committee on Taxation and the Congressional Budget Office. In contrast, Wisconsin Rep. Paul Ryan, President Obama, and even Romney all use an adjusted current policy baseline that assumes the Bush tax cuts will be extended.

Second, Kessler argues that progressives are wrong to use the $162 billion score against current policy because it overlaps with other tax policies they support, namely expiration of the upper-income Bush tax cuts. This is ridiculous, but complicated, so bear with me.

Let’s start with the very basic point that most policies have interaction effects with other policies. That’s why it’s important when creating a budget to consider the order in which you want to layer policies on top of a baseline. In other words, each policy is scored against a changing baseline in which all the previous policies have already been adopted. It doesn’t matter to your top-line deficit impact, of course, but the scoring of many policies depends on whether they are preceded by other policies with which they interact—particularly when it comes to tax policy.

But scores for individual policies outside of the context of a larger comprehensive package are always scored against the same baseline. Kessler is implying that the Democrats and Republicans should use different baselines reflective of their policy preferences. But this would undermine the entire purpose of a baseline, which is to make sure that everyone’s numbers are calculated using the same assumptions so that the differences reflect only the policy differences. In other words, Kessler is defending Romney and the GOP for using a baseline that they use in no other circumstance, and criticizing progressives for using a baseline that they—along with everyone else—use consistently.

Since Kessler is seemingly the closest thing our political system has to a court of law, let’s examine the legal holding he’s just created: Scores must be measured against a baseline that reflects your other policy proposals. This creates a number of problems. First off, not everyone who supports the Buffett Rule supports all the same policy proposals. Let’s say I’m a congressman who opposes letting any Bush tax cuts expire: am I allowed to use the $162 billion score? What if I’ve been vague on the subject of the Bush tax cuts but strongly support the Buffett Rule? What score would I use then without violating Kessler’s rule?

Second, as I mentioned earlier, the order of the policies matters. Kessler argues that the $162 billion score overlaps with the $849 billion from the top two rates, so the $162 billion is wrong. But that assumes Democrats intend to layer the Buffett Rule on top of the rate increase; if they do the Buffett Rule first, then the $162 billion score is accurate.
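The stacking problem is easier to see with numbers. Below is a deliberately simplified Python toy model, not a reconstruction of any actual JCT, CBO, or Treasury score: one hypothetical taxpayer, a hypothetical rate increase standing in for higher top rates, and a Buffett-style minimum effective rate. All names and figures are illustrative assumptions. The minimum tax’s score depends on whether it is layered before or after the rate increase, while the combined revenue is the same either way.

```python
# Toy model only: every number here is hypothetical, chosen to show how
# stacking order changes individual scores but not the combined total.
INCOME = 10_000_000  # one hypothetical high-income taxpayer, in dollars
BASE_RATE = 20       # hypothetical effective tax rate under the baseline, percent
RATE_INCREASE = 5    # hypothetical stand-in for higher top rates, percentage points
MINIMUM_RATE = 30    # Buffett-style minimum effective rate, percent


def tax_owed(regular_rate, minimum_rate=None):
    """Tax at the regular rate, or at the minimum rate if one applies and is higher."""
    rate = regular_rate if minimum_rate is None else max(regular_rate, minimum_rate)
    return INCOME * rate // 100


def score_in_order(order):
    """Score each policy against a baseline that already includes the policies layered before it."""
    regular, minimum = BASE_RATE, None
    scores = {}
    for policy in order:
        before = tax_owed(regular, minimum)
        if policy == "rate_increase":
            regular += RATE_INCREASE
        elif policy == "minimum_tax":
            minimum = MINIMUM_RATE
        scores[policy] = tax_owed(regular, minimum) - before
    return scores


minimum_first = score_in_order(["minimum_tax", "rate_increase"])
rates_first = score_in_order(["rate_increase", "minimum_tax"])
print(minimum_first)  # the minimum tax keeps its full stand-alone score
print(rates_first)    # the minimum tax scores less once rates are already higher
print(sum(minimum_first.values()) == sum(rates_first.values()))  # combined total unchanged
```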

See how complicated this gets? Heck, I probably lost most of you once you read the word “baseline” in the third sentence. So let’s make it simple. Right now, pretty much everyone uses a current policy baseline. They may differ around the margins—for example, should the baseline assume tax provisions like the research and experimentation credit get extended?—but they’re mostly the same. Generally, when people are using scores that aren’t against this baseline, they’re intentionally being misleading. And rather than encouraging that behavior, Kessler should call it out. After all, isn’t that his job?

Understanding the wedge between productivity and median compensation growth

One of the key dynamics of our economy for more than 30 years has been the divergence between productivity growth and compensation (or wage) increases for the typical worker. This divergence between pay and productivity has been increasingly recognized as being at the heart of the growth of income inequality. I am proud that Jared Bernstein (yo, Jared!) and I were the first ones to call attention to this, which we did in the introduction to The State of Working America 1994/1995, which was published on Labor Day in 1994. At that time, we were responding to the oft-repeated claim that wage stagnation experienced by most workers was caused by the post-1973 productivity slowdown. Get productivity up and all would be OK, we were told. Bob Rubin told us reducing the deficit would help accomplish that. By plotting productivity and median wage growth together, we were able to demonstrate that even though productivity growth was indeed historically slow in the preceding two decades, the growth of the median wage had substantially lagged even this anemic productivity growth. As it turns out, productivity growth accelerated in 1996 and has remained higher than in the 1973-1995 period since. Interestingly, the gap between productivity and median hourly compensation growth has grown at its greatest rate over the 2000-11 period despite productivity growth that continued to outpace the 1973-95 rates.

Understanding the driving forces behind the productivity-median hourly compensation gap is the subject of a new paper, The wedges between productivity and median compensation growth, that previews a portion of the analysis in the forthcoming State of Working America. This research reflects the results in a more technical paper, Why Aren’t Workers Benefiting from Labour Productivity Growth in the United States, that I co-authored with Kar-Fai Gee, an economist at the Canadian Centre for the Study of Living Standards (CSLS). The paper is in the spring 2012 issue of the International Productivity Monitor (edited by Andrew Sharpe and published by CSLS, on whose board I am proud to serve).

During the 1973 to 2011 period, labor productivity rose 80.4 percent, but the real median hourly wage increased only 4.0 percent, and real median hourly compensation (including all wages and benefits) increased just 10.7 percent. These trends are shown in the table below. If real median hourly compensation had grown at the same rate as labor productivity over the period, it would have been $32.61 in 2011 (2011 dollars), considerably more than the actual $20.01 (2011 dollars). Consequently, the conventional notion that increased productivity is the mechanism by which living standards rise must be revised to this: Productivity growth establishes the potential for living standards improvements, and economic policy must work to reconnect pay and productivity.
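The $32.61 counterfactual follows from the two cumulative growth rates. Here is a short Python sketch of that calculation, assuming (as the comparison implies) that both series are measured in constant 2011 dollars over the same 1973 to 2011 window.

```python
# Figures quoted in the paragraph above, 1973-2011.
productivity_growth = 0.804  # cumulative growth in labor productivity
median_comp_growth = 0.107   # cumulative growth in real median hourly compensation
median_comp_2011 = 20.01     # actual 2011 level, in 2011 dollars

# Back out the implied 1973 level (in 2011 dollars), then grow it at productivity's pace.
median_comp_1973 = median_comp_2011 / (1 + median_comp_growth)
counterfactual_2011 = median_comp_1973 * (1 + productivity_growth)

print(f"Implied 1973 level: ${median_comp_1973:.2f}")
print(f"2011 level if pay had tracked productivity: ${counterfactual_2011:.2f}")
print(f"Gap per hour: ${counterfactual_2011 - median_comp_2011:.2f}")
```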

The objective of our new paper is to provide a comprehensive and consistent decomposition of the factors explaining the divergence between growth in real median compensation (note the paper focuses on median wages while I have simplified the analysis here to focus on median compensation) and labor productivity since 1973 in the United States, with particular attention to the post-2000 period. In particular, the paper identifies the relative importance of three wedges driving the median compensation-productivity gap: 1) rising compensation inequality, 2) declining share of labor compensation in the economy (the shift from labor to capital income), and 3) divergence of consumer and output prices.

The following table is based on this paper and will be in the new edition of State of Working America that will be released on Labor Day. This decomposition is of economy-wide productivity growth, real average hourly compensation growth of all workers (including the self-employed), and the median real hourly compensation of workers age 18-64. See the paper for technical details.

Reconciling growth in median hourly compensation and productivity growth, 1973-2011

| | 1973-79 | 1979-95 | 1995-00 | 2000-11 | 1973-11 (full period) |
|---|---|---|---|---|---|
| A. Basic trends (annual growth, percent) | | | | | |
| Median hourly wage | -0.26 | -0.15 | 1.50 | 0.05 | 0.10 |
| Median hourly compensation | 0.56 | -0.17 | 1.13 | 0.35 | 0.27 |
| Average hourly compensation | 0.59 | 0.55 | 2.10 | 0.95 | 0.87 |
| Productivity | 1.08 | 1.29 | 2.33 | 1.88 | 1.56 |
| Productivity-median compensation gap | 0.52 | 1.46 | 1.21 | 1.53 | 1.30 |
| B. Explanatory factors (percentage-point contribution) | | | | | |
| Inequality of compensation | 0.02 | 0.72 | 0.97 | 0.59 | 0.61 |
| Shifts in labor’s share | 0.03 | 0.23 | -0.40 | 0.69 | 0.25 |
| Divergence of consumer and output prices | 0.46 | 0.51 | 0.64 | 0.24 | 0.44 |
| Total | 0.52 | 1.46 | 1.22 | 1.52 | 1.29 |
| C. Relative contribution to gap (percent of gap) | | | | | |
| Inequality of compensation | 4.8% | 49.6% | 80.0% | 38.9% | 46.9% |
| Shifts in labor’s share | 5.5% | 15.4% | -32.5% | 45.3% | 19.0% |
| Divergence of consumer and output prices | 89.7% | 35.0% | 52.5% | 15.8% | 34.0% |
| Total | 100.0% | 100.0% | 100.0% | 100.0% | 100.0% |
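Panel C expresses each wedge’s Panel B contribution as a percent of the total gap. This Python sketch applies that rule to two columns using the rounded figures printed in the table, so its results can differ from the published percentages by a few tenths of a point (the published shares were presumably computed from unrounded data). It also checks that productivity growth minus median compensation growth roughly equals the sum of the three wedges.

```python
# Panel B contributions (percentage points per year), copied from the table above.
contributions = {
    "2000-11": {"inequality": 0.59, "labor_share": 0.69, "prices": 0.24},
    "1973-11": {"inequality": 0.61, "labor_share": 0.25, "prices": 0.44},
}
# Panel A annual growth rates (percent) used to form the productivity-median gap.
productivity = {"2000-11": 1.88, "1973-11": 1.56}
median_comp = {"2000-11": 0.35, "1973-11": 0.27}


def relative_contributions(parts):
    """Express each wedge as a percent of the sum of all wedges (Panel C)."""
    total = sum(parts.values())
    return {name: 100 * value / total for name, value in parts.items()}


for period, parts in contributions.items():
    gap = productivity[period] - median_comp[period]
    print(f"{period}: gap {gap:.2f} vs. sum of wedges {sum(parts.values()):.2f}")
    for name, share in relative_contributions(parts).items():
        print(f"  {name}: {share:.1f}% of gap")
```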

Austerity in the UK — losing the argument and the economy

New data from the United Kingdom indicate that its economy has contracted for two consecutive quarters (six months), the rule-of-thumb definition of recession.

The lesson here should be pretty plain: this is the utterly predictable (and predicted in real time) result of the U.K.’s austerity policies.

Let’s be even more concrete: If the U.K. had just followed the fiscal stance of the United States over the past two years, they would not have re-entered recession. Adam Posen of the Bank of England recently estimated that the U.S. fiscal stance has contributed about 3 percent extra to overall GDP growth compared to a scenario where they had followed the U.K. stance. And this gap has actually widened in more recent years (and is projected to widen even further for 2012).

Posen’s estimate crucially includes the drag from state and local governments in the U.S., so it’s not like this overall fiscal stance in the U.S. over this time has been wildly expansionary. Just matching the U.S. fiscal support over this time period would have been a pretty modest goal.

But of course, this goal was rejected by the conservative government elected in mid-2010, and instead the U.K. has followed a plan based on austerity.

There is plenty to lament in policymaking responses to the crisis of the past four years, but the U.K. fiscal tightening may well be the single most avoidable own-goal over the period. Greece, for example, really can’t run expansionary fiscal policy right now (at least not without help from the core countries of the eurozone) without getting savaged by bond markets that will push up interest costs on debt.

The U.K., on the other hand, faces no such constraints. It prints its own currency, so it cannot be forced into default by bond markets, and there has been no upward pressure at all on its debt-servicing costs since the Great Recession began (see chart below). There is, in short, no actually-existing macroeconomic problem that austerity addresses. Instead, the swing toward it has been driven by ideology. And it has not turned out well.

Attempt to block labor election modernization goes down in flames

For most of the last year, Washington business lobbyists and various right-wing organizations have been engaged in an all-out war against the National Labor Relations Board, the agency that protects the right of employees to join a union if they want to. The NLRB has been excoriated for an enforcement action against Boeing, for requiring employers to post a notice letting employees know what their basic rights are under the law, and for trying to modernize its 65-year-old procedures for union representation elections. In addition, congressional Republicans have taken extraordinary steps to block President Obama from appointing a full five-member board to lead the agency and decide cases.

Yesterday, Republican senators failed in an effort to block the NLRB’s election modernization rule. The Senate defeated a resolution of disapproval 54-45, with all Democrats opposed and one brave Republican, Sen. Lisa Murkowski of Alaska, crossing party lines to join them. The resolution would have repealed the new rule and prevented the NLRB from adopting a new one to replace it.

One of the ironies of these right-wing attacks on the NLRB’s attempt to streamline representation election procedures is that they belie conservatives’ supposed dislike of excessive bureaucracy and frivolous litigation. Typically, business leaders and anti-government activists charge that government processes are plagued with unnecessary delays, for example in FDA approval of new drugs or medical devices. Even when rushing a product to market might cause disabling injuries or death, conservative critics usually side with speed over lengthy review.

Likewise, when the issue is the prevention of illegal immigration and the preservation of jobs for American citizens, leading businesses and trade organizations call for limited review and speedier determinations. Recently, for example, 40 multinational corporations wrote President Obama to complain that the State Department takes too long to issue visas to companies that want to bring foreign workers to the United States. The companies object to having government officials ask for evidence about the need for particular foreign computer techs, even though the Inspector General has found widespread fraud and abuse in visa applications. And nothing is more common than to hear officials of the Chamber of Commerce complain about frivolous litigation and laws that enrich attorneys (“full employment for attorneys!”) when the purpose of a law is to allow average citizens to sue after corporate misbehavior has jeopardized their health or safety.

So here, in the case of a regulation designed to reduce the opportunities for lawyers to delay representation elections through frivolous litigation, the Chamber is showing its real agenda. Efficiency no longer matters; the more time bureaucrats spend reviewing legal arguments that add nothing to a decision, the better.

Here’s a recent example involving T-Mobile: A union petitioned to represent a unit of 14 technicians in Connecticut. T-Mobile argued that five engineers should have been added to the unit. The law is clear that an employer cannot require that professional employees be added to a unit of non-professional employees, and engineers are regularly found to be professional employees. T-Mobile claimed that these engineers were different, forcing four days of hearings that wasted government resources. The new rules would have given the NLRB’s Hearing Officer the authority to require the employer to make an offer of proof as to how its engineers were “different” from the hundreds of cases in which engineers with college degrees were found to be professional employees, thus eliminating at least three of the four days of hearings and needless legal expenses.

Why are business lobbyists suddenly in favor of inefficiency and delay? Because delaying the date of the election gives the employer more time to harass and intimidate workers who otherwise might vote for a union.

Cornell researcher Kate Bronfenbrenner and her colleague Dorian Warren examined thousands of union representation cases and documented that employers engage in intense campaigns of abusive anti-union activity. They also found, as the NLRB put it, “a longer period between the filing of a petition and an election permits commission of more unfair labor practices with corresponding infringement upon employee free choice, while a shorter period leads to fewer unfair labor practices.”

Employers call the NLRB’s new election rules the “ambush election” rules because they remove various automatic appeals and such built-in delays as an arbitrary, automatic 25-day delay after the board issues an order for an election. This waiting period alone gave employers more than three extra weeks to hold captive-audience meetings (even requiring employees to attend them outside of normal work hours), to subject employees to repeated one-on-one sessions with their supervisors, and to figure out which employees support the union and which do not.

The NLRB has realized that its old rules tilted the playing field toward anti-union employers and ultimately discouraged employees from exercising their right to choose without interference. An honest assessment and a modest amount of consistency would lead most observers to agree that the new rules are fair and sensible.

Sequestration will slow the recovery and job growth, period

Wednesday morning, the House Budget Committee is holding a hearing on “Replacing the Sequester”—the sequester being the automatic spending cuts established by the debt ceiling deal that are scheduled to kick in next year. It’s a safe bet that Republicans will scream about defense cuts being bad for jobs, but let’s just remember that ALL these cuts are bad for jobs in the short run. (Defense and nondefense spending are split roughly evenly on the sequester chopping block.) We’ve been asked many times how much of an impact sequestration would have on near-term employment, and here are our best estimates:

These estimates reflect the impact of sequestration on total nonfarm payroll employment at the end of each fiscal year. They assume a fiscal multiplier of 1.4 for general government spending, which is Moody’s Analytics’ most recent public estimate of the government spending multiplier. While we use the same multiplier for all cuts, we’d guess that these estimates slightly overstate the adverse economic impact of defense spending cuts and understate job losses from domestic spending cuts. Programs for lower-income households in the discretionary budget, such as housing assistance and the special supplemental food program for women, infants, and children (WIC), have particularly high multipliers, as does infrastructure spending. And to the extent that cuts to Department of Defense spending come from capital-intensive weapons acquisitions rather than reductions in personnel strength, the impact on employment would be milder. Regardless, any cuts in the near term (unless they are ploughed into more spending somewhere else) are going to constitute a drag on the still-weak recovery.
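The multiplier arithmetic starts with one step: a spending cut times the multiplier gives the GDP loss. The post does not say how it then converts GDP into payroll jobs, so the `output_per_job` figure below is a purely hypothetical placeholder. The function names and the $100 billion cut are also illustrative. This is a minimal Python sketch, not EPI's model.

```python
MULTIPLIER = 1.4  # Moody's Analytics spending multiplier cited in the post

def gdp_impact(spending_cut: float, multiplier: float = MULTIPLIER) -> float:
    """Reduction in GDP (dollars) implied by a cut in government spending."""
    return spending_cut * multiplier

def jobs_impact(spending_cut: float, output_per_job: float,
                multiplier: float = MULTIPLIER) -> float:
    """Jobs lost, given a hypothetical dollars-of-GDP-per-job conversion."""
    return gdp_impact(spending_cut, multiplier) / output_per_job

# Hypothetical inputs: a $100 billion cut and $150,000 of GDP per job.
cut = 100e9
print(f"GDP loss: ${gdp_impact(cut) / 1e9:.0f} billion")  # $140 billion
print(f"Jobs lost: {jobs_impact(cut, 150_000):,.0f}")
```

The post's caveat could be modeled by passing a lower `multiplier` for capital-intensive defense purchases and a higher one for programs like WIC. The post itself applies 1.4 throughout.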

Cutting government spending reduces aggregate demand and worsens joblessness while the economy is running well below potential output. The Keynesian logic that conservatives invoke selectively, in their advocacy for defense spending and tax cuts among other priorities, applies to the rest of government spending, too: the national income and product accounts count a dollar of nondefense purchases exactly like a dollar of defense purchases.

Romney opposes the Buffett Rule? Why would that be?

Via the Washington Post’s Fact Checker by Glenn Kessler, I see that Mitt Romney has adopted the specious “if it doesn’t fix the entire problem, it’s not worth doing” objection to the Buffett Rule. Romney brushed aside the Buffett Rule because the $5 billion in revenue it would raise in fiscal 2013 relative to current law would fund the government for only 11 hours.
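The "11 hours" framing is a single division: revenue divided by federal spending per hour. The post does not give annual outlays, so the $4 trillion below is an assumed round figure. With that figure, the arithmetic lands at about 11 hours. This is a minimal Python sketch, and the function name is hypothetical.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760

def hours_funded(revenue: float, annual_outlays: float) -> float:
    """Hours of federal spending that a given amount of revenue would cover."""
    return revenue / (annual_outlays / HOURS_PER_YEAR)

# Assumption: roughly $4 trillion a year in federal outlays (not from the post).
print(round(hours_funded(5e9, 4e12)))  # 11
```

The same framing shrinks any single line item. A program worth one-half of 1 percent of the budget, like the job training programs discussed below, covers only about 44 hours a year (0.005 × 8,760).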

First, it’s interesting to note that Romney and other individuals deriving the vast majority of their income from investments benefit tremendously from the lack of a Buffett Rule (which is more accurately characterized as the Romney Rule). The Paying a Fair Share Act—the Senate’s legislative version of the Buffett Rule that was filibustered last week (in spite of 72 percent public support)—would serve as a millionaires’ alternative minimum tax. When fully phased in, it would apply a minimum tax rate of 30 percent on adjusted gross income less charitable contributions (modified by the limitation on itemized deductions—temporarily repealed as part of the Bush tax cuts—if reinstated).

Mitt Romney’s 2010 tax return showed $21.6 million in adjusted gross income and $3.0 million in charitable giving. Had the Paying a Fair Share Act been fully in effect, Romney would have paid roughly $5.6 million in taxes for an effective tax rate of 25.8 percent, instead of the $3.0 million in taxes and 13.9 percent effective tax rate he paid for the year. That’s because the Buffett Rule is an indirect way to close the preferential rates on capital gains and dividends, as well as the carried interest loophole, that produced Romney’s rock-bottom tax rate.
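The figures above can be reproduced from the post's own simplified description of the rule: 30 percent of adjusted gross income less charitable contributions. The minimal Python sketch below ignores the phase-in range and any limitation on itemized deductions, which is consistent with the fully-phased-in, limitation-repealed case described above. It is not the statute's exact formula, and the function name is hypothetical.

```python
def fair_share_tax(agi: float, charitable: float, rate: float = 0.30) -> float:
    """Simplified Buffett Rule minimum tax: rate x (AGI - charitable giving).

    Simplification: ignores the phase-in range and any itemized-deduction
    limitation; the law's precise mechanics may differ.
    """
    return rate * (agi - charitable)

agi, charity, tax_paid = 21.6e6, 3.0e6, 3.0e6  # Romney's 2010 return, per the post
minimum_tax = fair_share_tax(agi, charity)
tax_owed = max(tax_paid, minimum_tax)  # the minimum tax binds only if it is higher

print(f"Tax owed under the rule: ${tax_owed / 1e6:.1f} million")  # $5.6 million
print(f"Effective rate under the rule: {tax_owed / agi:.1%}")      # 25.8%
print(f"Effective rate actually paid: {tax_paid / agi:.1%}")       # 13.9%
```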

Second, there’s an enormous amount of hypocrisy and insincerity going on here. As I recently noted, this ice-thin defense against popularly supported progressive tax policies is often used by the same people who spend inordinate amounts of time on defunding the National Endowment for the Arts, National Public Radio, Planned Parenthood, or other small budgetary line-items. Romney himself devotes eight full pages in his economic plan to his proposal to cut job training programs, which together represent one-half of 1 percent of the budget.

Furthermore, the Buffett Rule would raise far more than advertised. The cited estimate by the Joint Committee on Taxation (JCT) is based on current law, meaning that the Bush-era tax cuts are assumed to expire. Kessler notes that liberals have been pointing to a different JCT score, based on current policy, showing $162 billion in new revenue. But Kessler dismisses liberals’ use of the current-policy score because other tax policies they support, namely expiration of the upper-income Bush tax cuts, would overlap with the $162 billion. True. But then you need to talk about the entire package and credit the Democrats with $47 billion in addition to the $849 billion that would be raised over the next decade by letting the upper-income tax cuts expire. Conversely, if we’re adjusting baselines for stated policy preferences, conservatives should be citing the $162 billion figure for the Buffett Rule, reflecting continuation of all the Bush tax cuts.
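Kessler's overlap objection and the reply to it come down to stacking order. The package total adds the Buffett Rule's $47 billion on top of the $849 billion from upper-income expiration. Adding the $849 billion to the $162 billion current-policy score would instead count the shared revenue twice. The minimal Python sketch below uses only the post's figures. Treating the gap between the two Buffett scores as the overlap is a rough approximation, not a JCT estimate.

```python
# Ten-year revenue figures cited in the post, in billions of dollars.
upper_income_expiration = 849  # letting the upper-income Bush tax cuts expire
buffett_on_top = 47            # Buffett Rule revenue credited on top of expiration
buffett_current_policy = 162   # Buffett Rule scored with all Bush cuts extended

package = upper_income_expiration + buffett_on_top
naive_sum = upper_income_expiration + buffett_current_policy
approx_overlap = naive_sum - package  # revenue both policies would claim

print(package)         # 896
print(approx_overlap)  # 115
```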

Regardless of revenue scores, conservatives will again trot out the “if it doesn’t fix the entire problem, it’s not worth doing” line—but it’s ludicrous to argue that $896 billion is such a trivial sum that it’s not worth pursuing. That’s more than the first round of Budget Control Act spending cuts and caps conservatives extracted by hijacking the statutory debt ceiling. Accounting for net interest savings, the Buffett Rule coupled with expiration of the upper-income Bush tax cuts would save more than $1 trillion over a decade and reduce the debt-to-GDP ratio by 4.2 percentage points by 2022, relative to current budget policies. By all objective accounts, this is serious deficit reduction that would substantially improve fairness in the tax code. Strange, how purported concerns about the public debt fade into the ether when tax increases—or tax cuts—are on the table.

The 2012 Social Security trustees report in a nutshell

According to the trustees report released today, Social Security’s surplus is projected at $57.3 billion in 2012. Social Security will continue to run a surplus through the end of the decade. As a result, the trust fund will grow from $2.74 trillion in 2012 to a peak of $3.06 trillion in 2020 (Table VI.F8 on p. 206).

Social Security is now running a “cash-flow deficit,” which means it is running a deficit if you exclude the $110.4 billion Social Security is earning in interest on trust fund assets (Table VI.F8 on p. 206). Social Security will begin running a deficit in the commonly understood sense in 2021, when it begins drawing down the trust fund to help pay for the Baby Boomers’ retirement. This is perfectly natural: the trust fund was never supposed to grow indefinitely but was meant to provide a cushion to help pay for the retirement of the large Baby Boom generation. That said, the Great Recession and weak recovery brought forward the date when Social Security will first begin to tap the trust fund to help pay for benefits.
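The gap between the overall surplus and the cash-flow deficit comes down to one subtraction: remove interest income from the surplus. The short Python sketch below uses the report's 2012 figures as quoted in the post. The variable names are illustrative.

```python
# 2012 projections from the trustees report (Table VI.F8), in billions of dollars.
total_surplus = 57.3     # overall surplus, including interest income
interest_income = 110.4  # interest earned on trust fund assets

cash_flow_balance = total_surplus - interest_income
print(f"Cash-flow (non-interest) balance: {cash_flow_balance:.1f} billion")  # -53.1
```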

If nothing is done to shore up the system’s finances, the trust fund will be exhausted in 2033, three years earlier than projected in last year’s report (p. 3). When the trust fund is exhausted, current revenues will still be sufficient to pay 75 percent of promised benefits (p. 11). Even in this worst-case scenario, future benefits will be higher than current benefits in inflation-adjusted terms, but because wages are projected to rise over time, these benefit levels will replace a shrinking share of pre-retirement income.

The projected shortfall over the next 75 years is 2.67 percent of taxable payroll (0.96 percent of GDP). This is 0.44 percentage points larger than in last year’s report (p. 4 and Table VI.F4 on p. 197). Slightly more than one-tenth of the deterioration (0.05 percentage points) is due to the changing valuation period, and the rest is due to updated data and near-term projections and changes in longer-term assumptions.
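The valuation-period share can be checked directly. Reading that effect as 0.05 percentage points, the only value consistent with "slightly more than one-tenth" of a 0.44-point change, gives about 11 percent. The same figures also imply how large taxable payroll is relative to GDP. This is a minimal Python sketch, and the variable names are illustrative.

```python
shortfall_payroll = 2.67        # 75-year shortfall, percent of taxable payroll
shortfall_gdp = 0.96            # same shortfall, percent of GDP
total_change = 0.44             # deterioration since last year, percentage points
valuation_period_effect = 0.05  # portion due to the changing valuation period

share = valuation_period_effect / total_change
implied_payroll_to_gdp = shortfall_gdp / shortfall_payroll

print(f"Valuation-period share: {share:.0%}")                       # 11%
print(f"Implied taxable payroll / GDP: {implied_payroll_to_gdp:.0%}")  # 36%
```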